Showing

- docs/source/pipelineoverview_old.rst 24 additions, 0 deletionsdocs/source/pipelineoverview_old.rst

- docs/source/polalign.png 0 additions, 0 deletionsdocs/source/polalign.png

- docs/source/polalign_amp_polXX.png.REMOVED.git-id 1 addition, 0 deletionsdocs/source/polalign_amp_polXX.png.REMOVED.git-id

- docs/source/polalign_ph_polXX.png.REMOVED.git-id 1 addition, 0 deletionsdocs/source/polalign_ph_polXX.png.REMOVED.git-id

- docs/source/polalign_ph_poldif.png.REMOVED.git-id 1 addition, 0 deletionsdocs/source/polalign_ph_poldif.png.REMOVED.git-id

- docs/source/polalign_rotangle.png.REMOVED.git-id 1 addition, 0 deletionsdocs/source/polalign_rotangle.png.REMOVED.git-id

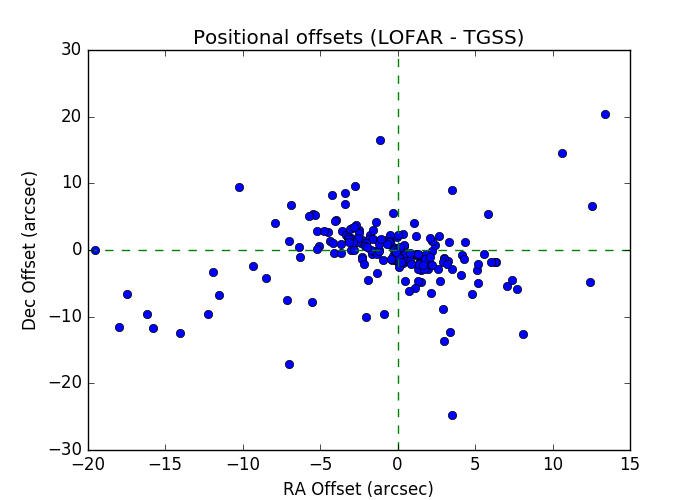

- docs/source/positional_offsets_sky.png 0 additions, 0 deletionsdocs/source/positional_offsets_sky.png

- docs/source/prefactor_CWL_workflow_sketch.png 0 additions, 0 deletionsdocs/source/prefactor_CWL_workflow_sketch.png

- docs/source/prefactor_workflow_sketch.png 0 additions, 0 deletionsdocs/source/prefactor_workflow_sketch.png

- docs/source/preparation.rst 31 additions, 0 deletionsdocs/source/preparation.rst

- docs/source/running.rst 126 additions, 0 deletionsdocs/source/running.rst

- docs/source/running_old.rst 99 additions, 0 deletionsdocs/source/running_old.rst

- docs/source/structure.png 0 additions, 0 deletionsdocs/source/structure.png

- docs/source/target.rst 338 additions, 0 deletionsdocs/source/target.rst

- docs/source/target_field.png 0 additions, 0 deletionsdocs/source/target_field.png

- docs/source/target_old.rst 210 additions, 0 deletionsdocs/source/target_old.rst

- docs/source/targetscheme.png 0 additions, 0 deletionsdocs/source/targetscheme.png

- docs/source/tec.png 0 additions, 0 deletionsdocs/source/tec.png

- docs/source/unflagged_fraction.png 0 additions, 0 deletionsdocs/source/unflagged_fraction.png

- docs/source/uv-coverage.png 0 additions, 0 deletionsdocs/source/uv-coverage.png

docs/source/pipelineoverview_old.rst

0 → 100644

docs/source/polalign.png

0 → 100644

361 KiB

docs/source/positional_offsets_sky.png

0 → 100644

38 KiB

149 KiB

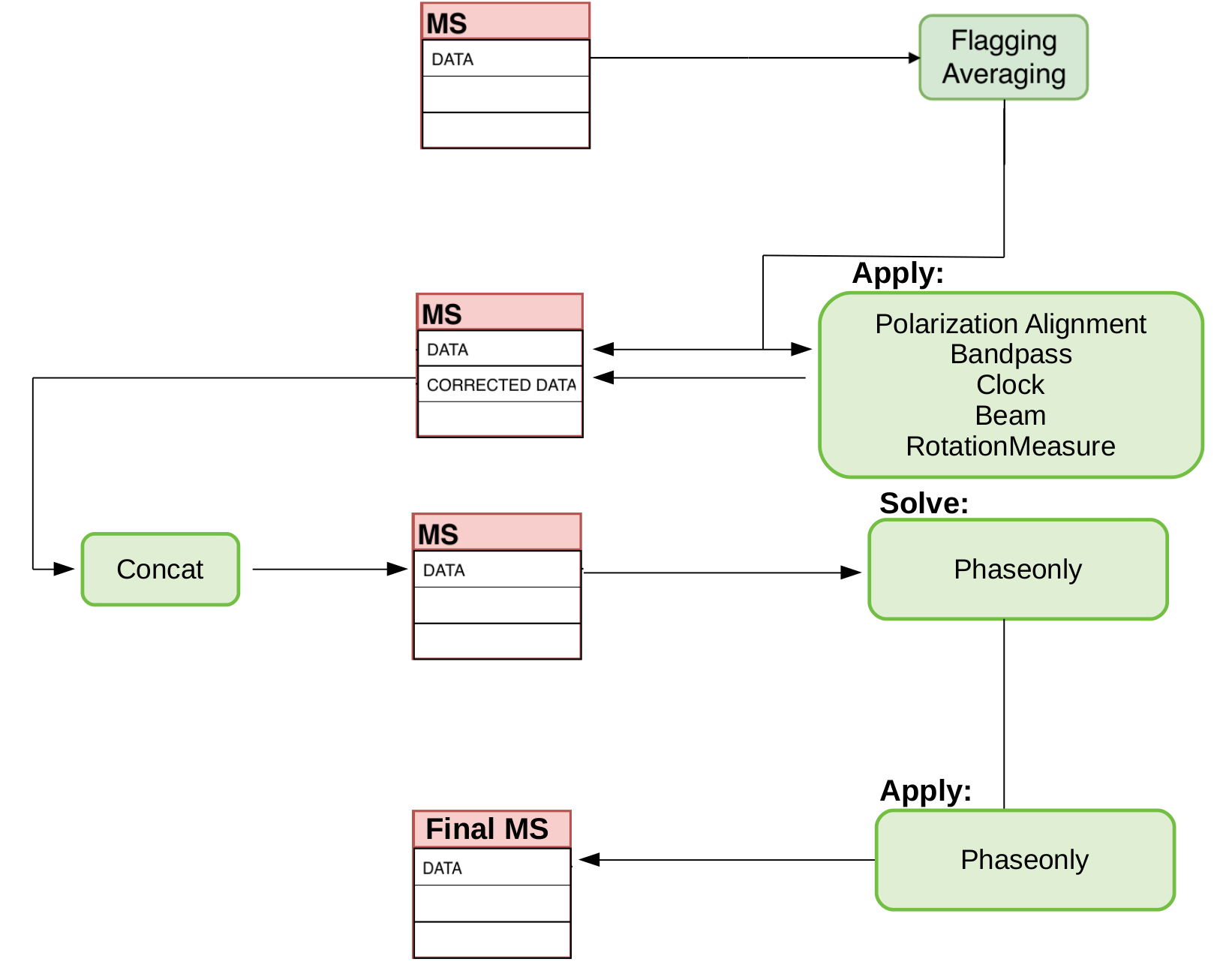

docs/source/prefactor_workflow_sketch.png

0 → 100644

146 KiB

docs/source/preparation.rst

0 → 100644

docs/source/running.rst

0 → 100644

docs/source/running_old.rst

0 → 100644

docs/source/structure.png

0 → 100644

43.5 KiB

docs/source/target.rst

0 → 100644

docs/source/target_field.png

0 → 100644

646 KiB

docs/source/target_old.rst

0 → 100644

docs/source/targetscheme.png

0 → 100644

101 KiB

docs/source/tec.png

0 → 100644

158 KiB

docs/source/unflagged_fraction.png

0 → 100644

19.1 KiB

docs/source/uv-coverage.png

0 → 100644

117 KiB